United States Patent and Trademark Office

Patent Quality Forum Series

Washington DC * Milwaukee, WI

Kansas City, MO * Portland, OR * Baton Rouge, LA

November 3-16, 2016

| • | Master Review Form – Background | ○ | USPTO has a long history of reviewing its own work | ■ | Office of Patent Quality Assurance (OPQA) | | ■ | Regular supervisor reviews | | ■ | Other formal review programs | | ■ | Informal feedback |

| | ○ | Reviews, using different formats, focused on correctness and provided feedback on clarity | | ○ | Review data was routinely analyzed separately |

|

| • | MRF Program Goals | ○ | To create a single, comprehensive tool (called the Master Review Form) that can be used by all areas of the Office to consistently review final work product | | ○ | To better collect information on the clarity and correctness of Office actions | | ○ | To collect review results into a single data warehouse for more robust analysis |

|

| • | MRF Iteration and Implementation | ○ | Developed Version 1.0 and deployed in OPQA November, 2015 | ■ | Trained reviewers for consistent usage of the extensive form | | ■ | Obtained internal feedback |

| | ○ | Published Federal Register Notice with Version 1.0 and collected comments March-May, 2016 | | ○ | Developed Version 2.0 and deployed in OPQA June, 2016 | ■ | Technology Centers began using the form July, 2016 |

|

|

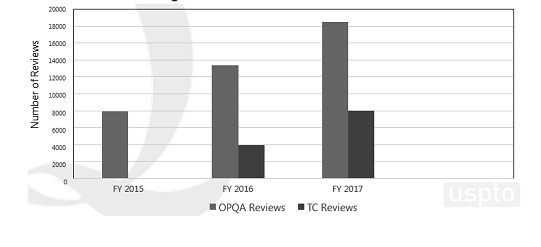

| • | MRF Reviews are Increasing

|

| |

|

| • | MRF Looking Forward | ○ | The MRF’s single data warehouse facilitates: | ■ | Better quality metrics | • | Higher number of reviews | | • | More complete reviews |

| | ■ | Case studies without the need of directed, ad hoc reviews | | ■ | Rapid measurement of the impact due to training, incentives, or other quality programs on our work product | | ■ | Quality monitoring tools, such as dashboards |

| | ○ | Linking MRF data to Big Data |

|

| • | Improving Clarity and Reasoning – ICR Training Program Goals | ○ | To identify particular areas of prosecution that would benefit from increased clarity of the record and develop training | | ○ | To enhance all training to include tips and techniques for enhancing the clarity of the record as an integral part of ongoing substantive training |

|

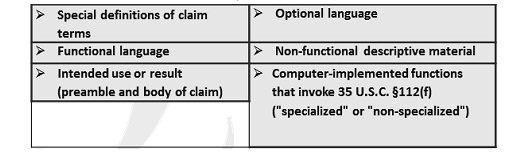

| • | ICR Training Courses | ○ | 35 U.S.C. 112(f): Identifying Limitations that Invoke § 112(f) | | ○ | 35 U.S.C. 112(f): Making the Record Clear | | ○ | 35 U.S.C. 112(f): Broadest Reasonable Interpretation and Definiteness of § 112(f) Limitations | | ○ | 35 U.S.C. 112(f): Evaluating Limitations in Software-Related Claims for Definiteness under 35 U.S.C. 112(b) | | ○ | Broadest Reasonable Interpretation (BRI) and the Plain Meaning of Claim Terms | | ○ | Examining Functional Claim Limitations: Focus on Computer/Software-related Claims | | ○ | Examining Claims for Compliance with 35 U.S.C. 112(a): Part I – Written Description | | ○ | Examining Claims for Compliance with 35 U.S.C. 112(a): Part II – Enablement | | ○ | 35 U.S.C. 112(a): Written Description Workshop | | ○ | § 112(b): Enhancing Clarity By Ensuring That Claims Are Definite Under 35 U.S.C. 112(b) | | ○ | 2014 Interim Guidance on Patent Subject Matter Eligibility | | ○ | Abstract Idea Example Workshops I & II | | ○ | Enhancing Clarity By Ensuring Clear Reasoning of Allowance Under 37 C.F.R. 1.104(e) and MPEP 1302.14 | | ○ | 35 U.S.C. 101: Subject Matter Eligibility Workshop III: Formulating a Rejection and Evaluating the Applicant’s Response | | ○ | 35 U.S.C. 112(b): Interpreting Functional Language and Evaluating Claim Boundaries – Workshop | | ○ | Advanced Writing Techniques utilizing Case Law |

|

| • | Stakeholder Training on Examination Practice and Procedure (STEPP) | ○ | 3-Day training on examination practice and procedure for patent practitioners | | ○ | Provide external stakeholders with a better understanding of how and why an examiner makes decisions while examining a patent application | | ○ | Aid in compact prosecution by disclosing to external stakeholders how examiners are taught to use the MPEP to interpret an applicant’s disclosure |

|

| • | STEPP Course Schedule

|

| • | Training Resources | ○ | All examiner training, including the above ICR Training, is publicly available | | | | | ○ | Stakeholder Training on Examination Practice and Procedure (STEPP) launched July 12th |

|

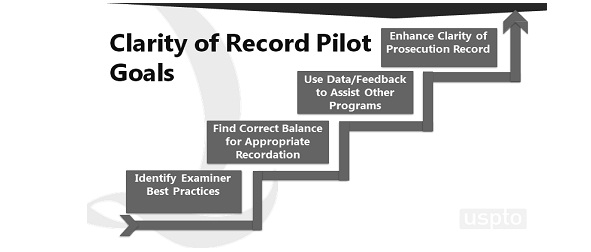

| • | Clarity of Record Pilot – Purpose | ○ | This program is to develop best Examiner practices for enhancing the clarity of various aspects of the prosecution record and then to study the impact on the examination process of implementing these best practices. |

|

| • | Clarity of Record Pilot Goals

|

| • | Clarity of Record Pilot - Areas of Focus | ○ | More detailed interview summaries | | ○ | Enhanced documentation of claim interpretation

| | ○ | More precise reasons for allowance | | ○ | Pre-search interview - Examiner’s option |

|

| • | Clarity of Record Pilot – Participants | ○ | 125 Examiners participated | ■ | Advanced Training | | ■ | Met regularly | | ■ | Recorded time spent |

| | ○ | 45 Supervisors (SPEs) participated | ■ | Managed program | | ■ | Provided reviews | | ■ | Provided direct assistance |

|

|

| • | Clarity of Record Pilot – Evaluation | ○ | 2,600 Office actions (reviewed and recorded) | ■ | Included a statistical mix of: | • | Pre-Pilot Office actions | | • | Pilot Office actions | | • | Control group |

|

| | ○ | Key Drivers were determined | | ○ | Best practices were gathered |

|

| • | Results and Recommendations – Interview Summaries | ○ | Identified Best Practices/Key Drivers: | ■ | Adding the substance of the Examiner’s position | | ■ | Providing the details of an agreement, if reached | | ■ | Including a description of the next steps that will follow the interview |

| | ○ | Recommendations: | ■ | Provide corps-wide training on enhancing the clarity of interview summaries that focuses on the identified best practices/key drivers | | ■ | Consider whether to require examiners to complete more comprehensive interview summaries | | ■ | Continue to evaluate Pilot cases to see whether improved interview summary clarity has a long-term impact on prosecution |

|

|

| • | Results and Recommendations – 112(f) Limitations | ○ | Identified Best Practices/Key Drivers: | ■ | Explaining 112(f) presumptions and how the presumptions were overcome (when applicable) | | ■ | Using the appropriate form paragraphs | | ■ | Identifying in the specification the structure that performs the function |

| | ○ | Recommendation: | ■ | Consider whether to require examiners to use the 112(f) form paragraph |

|

|

| • | Results – 102 and 103 Rejections (Claim Interpretation) | ○ | Identified Best Practices/Key Drivers: | ■ | Clearly addressing all limitations in 35 U.S.C. 102 rejections when claims were grouped together | | ■ | Explaining the treatment of intended use and non-functional descriptive material limitations in 35 U.S.C. 103 rejections |

| | | | | ○ | Overall Pilot Determination: | ■ | Examiners currently doing a good job with clarity in claim interpretation |

|

|

| • | Results and Recommendations – 102 and 103 Rejections (Claim Interpretation) | ○ | Key Drivers that Added to and Detracted From Clarity: | ■ | Providing, in 35 U.S.C. 102 rejections, an explanation for limitations that have been identified as inherent | | ■ | Providing, in 35 U.S.C. 103 rejections, annotations to pin-point where each claim limitation is met by the references |

| | ○ | Recommendation: | ■ | Assess how to use the identified best practice of recording claim interpretation to improve the clarity of Office actions without detracting from clarity |

|

|

| • | Results and Recommendations – Reasons for Allowance | ○ | Identified Best Practices/Key Drivers: | ■ | Identify specific allowable subject matter or where found, if earlier presented, during prosecution | | ■ | Confirm applicant’s persuasive arguments | | ■ | Address all independent claims |

| | ○ | Recommendations: | ■ | Provide training on best practices | | ■ | Require more comprehensive reasons for allowance |

|

|

| • | Results – Additional Practices | ○ | Identified Best Practice: | ■ | Pilot Examiners shared best practices with non-Pilot Examiners |

| | ○ | Practices that did NOT significantly impact overall clarity: | ■ | Providing an explanation regarding the patentable weight given to a preamble | | ■ | Providing an explanation of how relative terminology in a claim is being interpreted | | ■ | Providing an explanation for how a claim limitation that was subject to a rejection under 35 U.S.C. 112(b) has been interpreted for purposes of applying a prior art rejection |

|

|

| • | Clarity of the Record – Next Steps | ○ | Surveys | ■ | Internal surveys sent to Pilot examiners | | ■ | Data currently being collected |

| | ○ | Quality Chat | ■ | Gather information/thoughts on any differences seen during Pilot time period | | ■ | Share data results of Pilot | | | | | ■ | Discuss/share best practices |

| | ○ | Focus Sessions | ■ | Are best practices still being used? | | ■ | Discuss amended cases resulting from Pilot |

| | ○ | Monitor Pilot Treated Cases | ■ | Are applicant’s arguments more focused? | | ■ | Average time to disposal compared to pre-pilot cases? |

| | ○ | Recommendations | ■ | Discuss implementation of training and best practices in all Technology Centers | | ■ | Consider further efforts to enhance claim interpretation including key drivers that did not significantly impact clarity | | ■ | Expand Pilot to gather additional data |

| | ○ | http://www.uspto.gov/patent/initiatives/clarity-record-pilot |

|

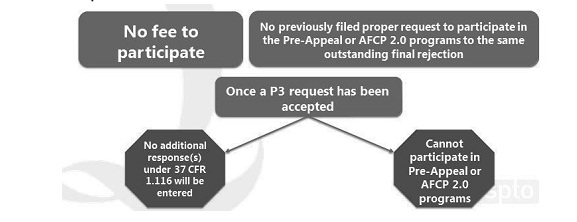

| • | Post-Prosecution Pilot (P3) – Goal | ○ | Developed to impact patent practice during the period subsequent to final rejection and prior to the filing of a notice of appeal | | ○ | Adding to current programs: | ■ | After Final Consideration Pilot (AFCP 2.0) | | ■ | Pre-appeal Brief Conference Pilot |

|

|

| • | Post-Prosecution Pilot (P3) – Overview | ○ | Retains popular features of the Pre-appeal Brief Conference Pilot and AFCP 2.0 programs: | ■ | Consideration of 5 pages of arguments | | ■ | Consideration of non-broadening claim amendments | | ■ | Consideration by a panel |

| | ○ | Adds requested features: | ■ | Presentation of arguments to a panel of examiners | | ■ | Explanation of the panel’s recommendation in a written decision after the panel confers |

|

|

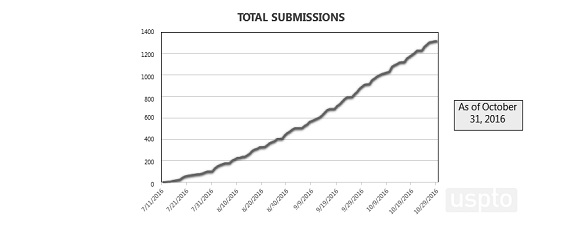

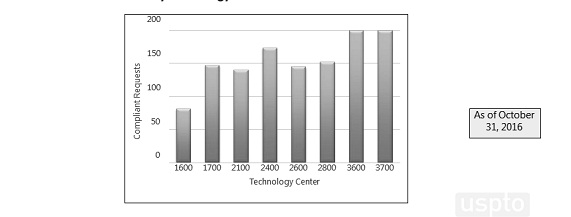

| • | Post-Prosecution Pilot (P3) – Begins | ○ | Federal Register Notice (81 FR 44845) began the Pilot on July 11, 2016 | | ○ | Runs six (6) months or upon receipt of 1,600 compliant requests, whichever occurs first | ■ | 200 per Technology Center |

| | ○ | Formal comments about P3 will be received through November 14, 2016 at AfterFinalPractice@uspto.gov |

|

| |

|

| • | P3 Pilot – Requirements

|

| • | P3 Pilot Participation | ○ | Open to nonprovisional and international utility applications filed under 35 USC 111(a) or 35 USC 371 that are under final rejection. | | ○ | The following are required for pilot entry: | ■ | A request, such as in PTO/SB/444, must be filed via EFS-Web within 2 months of the mail date of the final rejection and prior to filing a notice of appeal | | ■ | A statement that applicant is willing and available to participate in a P3 conference with the panel of examiners | | ■ | A response comprising no more than five (5) pages of arguments under 37 CFR 1.116 to the outstanding final rejection, exclusive of any amendments | | ■ | Optionally, a proposed non-broadening amendment to one (1) or more claim(s) |

|

|

| • | P3 Pilot – Request Compliance | ○ | For requests considered timely and compliant, the application is entered into the pilot process. | | ○ | For requests considered untimely or non-compliant (or if filed after the technology center has reached its limit): | ■ | The Office will treat the request as any after final response absent a P3 request. | | ■ | No conference will be held. |

|

|
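The entry requirements and compliance rules on the two preceding slides can be read as a checklist. The following is a minimal sketch of that checklist, not the Office's actual procedure: the `P3Request` fields, the `p3_problems` helper, and the `add_months` deadline calculation are all hypothetical names introduced for illustration, and the two-month window is computed as plain calendar months with no handling of weekends, holidays, or extensions.

```python
import calendar
from dataclasses import dataclass
from datetime import date

# Limits taken from the slides; the constant names are illustrative.
MAX_ARGUMENT_PAGES = 5
FILING_WINDOW_MONTHS = 2
REQUESTS_PER_TECHNOLOGY_CENTER = 200


def add_months(start, months):
    """Return the same day-of-month `months` later, clamped to the month's end."""
    month_index = start.month - 1 + months
    year = start.year + month_index // 12
    month = month_index % 12 + 1
    day = min(start.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


@dataclass(frozen=True)
class P3Request:
    """Hypothetical record of one P3 request (not a USPTO data format)."""
    final_rejection_mailed: date
    request_filed: date
    notice_of_appeal_filed: bool
    willingness_statement: bool
    argument_pages: int
    amendment_broadens_claims: bool = False  # False also covers "no amendment"


def p3_problems(request, accepted_in_tech_center):
    """List the reasons a request would be untimely or non-compliant."""
    problems = []
    deadline = add_months(request.final_rejection_mailed, FILING_WINDOW_MONTHS)
    if request.request_filed > deadline:
        problems.append("request filed after the 2-month window")
    if request.notice_of_appeal_filed:
        problems.append("notice of appeal already filed")
    if not request.willingness_statement:
        problems.append("missing statement of willingness to participate")
    if request.argument_pages > MAX_ARGUMENT_PAGES:
        problems.append("arguments exceed five pages")
    if request.amendment_broadens_claims:
        problems.append("proposed amendment broadens the claims")
    if accepted_in_tech_center >= REQUESTS_PER_TECHNOLOGY_CENTER:
        problems.append("technology center has reached its limit")
    return problems


# Illustrative request only; the dates and counts are made up.
example = P3Request(
    final_rejection_mailed=date(2016, 8, 1),
    request_filed=date(2016, 9, 15),
    notice_of_appeal_filed=False,
    willingness_statement=True,
    argument_pages=5,
)
print(p3_problems(example, accepted_in_tech_center=42) or "compliant")
```

An empty list corresponds to a request that enters the pilot; any entry in the list corresponds to the request being treated as an ordinary after-final response with no conference.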

| |

|

| • | P3 Pilot – Process

|

| • | P3 Pilot - Notice of Decision (PTO-2324) | ○ | Three possible outcomes are: | ■ | Final Rejection Upheld | • | The status of any proposed amendment(s) will be communicated | | • | The time period for taking further action will be noted |

| | ■ | Allowable Application | | ■ | Reopen Prosecution |

| | ○ | All of the above outcomes will include: | ■ | An Explanation of Decision | | ■ | A Survey |

|

|

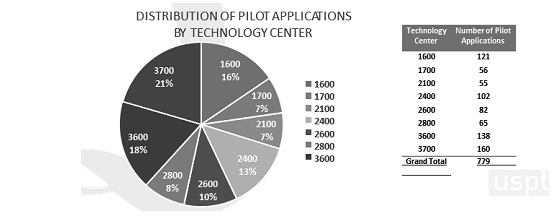

| • | P3 Pilot - Submissions to Date

|

| |

|

| • | P3 Pilot – Submissions by Technology

|

| • | P3 Pilot - Improper Requests

|

| • | P3 Pilot - Next Steps | ○ | Metrics for Consideration 1. | Internal and external survey results | 2. | Formal comments from FR Notice | 3. | Stakeholder feedback about the program from other sources |

| | ○ | Program Decision 1. | Continue the program, with modifications |

|

|

| • | More Information on P3 |

| • | Post Grant Outcomes Goal | ○ | This program is to develop a process for providing post grant outcomes from various sources to the examiner of record and the examiners of related applications. | ■ | Sources include: | • | the Federal Circuit, | | • | District Courts, | | • | Patent Trial and Appeal Board (PTAB), and | | • | Central Reexamination Unit (CRU). |

|

|

|

| • | Post Grant Outcomes – Objectives | ○ | Purpose: To learn from all post grant proceedings and inform examiners of their outcomes. 1. | Enhanced Patentability Determinations in Related Child Cases | • | Providing examiners with full access to trial proceedings submitted during PTAB post AIA Trials |

| 2. | Targeted Examiner Training | • | Data collected from the prior art submitted and examiner behavior will provide a feedback loop on best practices |

| 3. | Examining Corps Education | • | Provide examiners a periodic review of post grant outcomes focusing on technology sectors |

|

|

|

| • | Post Grant Outcomes - Objective 1 | ○ | Enhanced Patentability Determinations in Related Child Cases | • | Identify those patents being challenged at the PTAB under the AIA Trials that have pending related applications in the Patent Corps | | • | Provide the examiners of those pending related applications full access to the AIA trial proceedings of the parent case |

|

|

| • | Post Grant Outcomes Pilot | ○ | Post Grant Outcomes Pilot: April-August, 2016 | | ○ | Pilot participants included: | • | All examiners with a pending application related to an AIA trial |

| | ○ | Pilot participants: | • | Notified when they had an application | | | | | • | Provided full access to the trial proceedings | | • | Surveyed to identify best practices to be shared corps-wide |

|

|

| • | Post Grant Outcomes Pilot – Statistics by Technology

|

| • | Post Grant Outcomes Pilot – General Statistics

|

| |

|

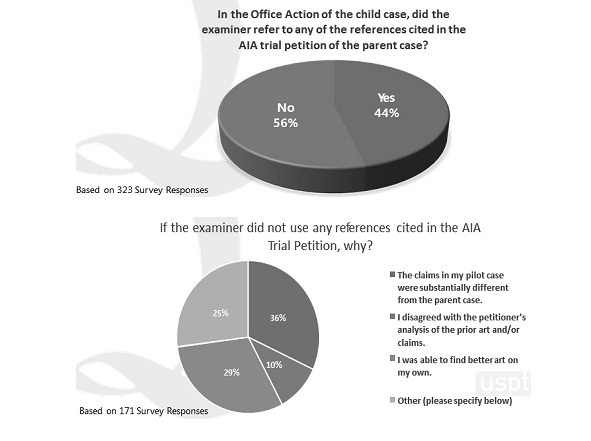

| • | Post Grant Outcomes Pilot – How References Were Used?

|

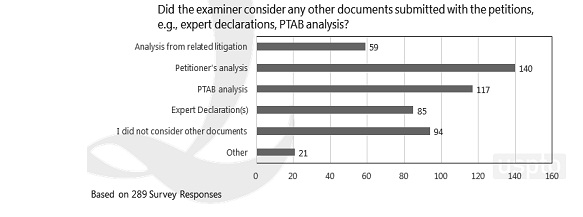

| • | Post Grant Outcomes Pilot – What Other PTAB Documents Were Used?

|

| • | Post Grant Outcomes - Objective 2 | ○ | Targeted Examiner Training | ■ | Data collected from the prior art submitted and resulting examiner behavior will provide a feedback loop on best practices | | ■ | Educate examiners on: | • | Prior art search techniques | | • | Sources of prior art beyond what is currently available | | • | Claim interpretation | | • | AIA Trial proceedings |

|

|

|

| |

|

| • | Post Grant Outcomes - Objective 3 | ○ | Examining Corps Education | ■ | Leverage results of all post grant proceedings to educate examiners on the process and results | • | Provide examiners a periodic review of post grant outcomes focusing on technology sectors | | • | Utilize the proceedings to give examining corps a fuller appreciation for the process |

|

|

|

| • | Post Grant Outcomes Summary | ○ | Learn from the results of post grant proceedings | | ○ | Shine a spotlight on highly relevant prior art uncovered in post grant proceedings | | ○ | Enhance patentability determinations in related child cases | | ○ | Build a bridge between PTAB and the examining corps |

|

| • | Post Grant Outcomes - Next Steps |

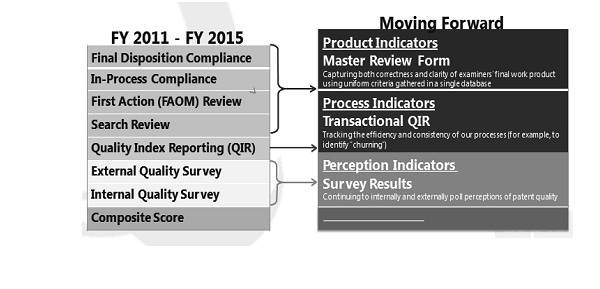

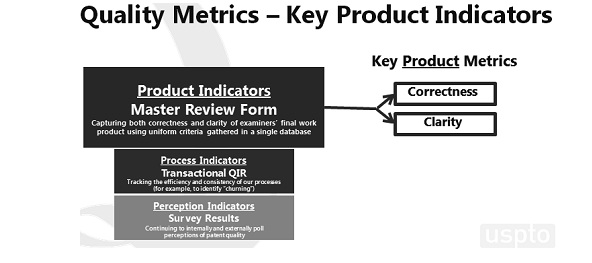

| • | Measuring Patent Quality at the USPTO | ○ | Primary focus has been on examination quality | ■ | Examiners’ adherence to laws, rules, and procedures | | ■ | Tracked against some established standards for desired outcomes | • | Correctness – statutory compliance | | • | Clarity | | • | Consistency | | • | Reopening | | • | Rework | | • | Impacts on advancing prosecution |

| | ■ | Basis for historic “compliance” metrics reported by USPTO |

|

|

| • | Challenges in Measuring Quality | ○ | Objectivity vs. Subjectivity | | ○ | Leading vs. Lagging indicators | ■ | What we are doing rather than what we did |

| | | | | ○ | Controlling for a wide range of factors | ■ | e.g. technology, examiner experience, applicant behavior, and pilot programs | | ■ | Establishing causal effects |

| | ○ | Balloon-effect of pushing quality could result in problems elsewhere | | ○ | Verification and validation of quality metrics | | ○ | There is no silver bullet | | ○ | Uniqueness of what we do |

|

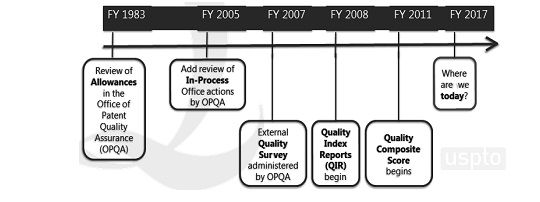

| • | An Historical Perspective on Measuring Patent Quality

|

| • | Overview of the Office of Patent Quality Assurance (OPQA) | ○ | Review Quality Assurance Specialists (RQAS) | ■ | 65 reviewers | | ■ | Average of 20 years of patent examination experience | | ■ | Demonstrated skills in production, quality, and training | | ■ | Assignments based on technology |

| | ○ | Major activities | ■ | Review of examiner work product | | ■ | Coaching and mentoring | | ■ | Practice and procedure training | | ■ | Program evaluations, case studies, ad hoc analyses |

|

|

| • | Scope of OPQA Review | ○ | Where do we review? | ■ | Mailed Office actions | • | Non-final rejections, Final rejections, and Allowances |

|

| | ○ | How do we select what is reviewed? | ○ | Random sampling | • | Primary factors in sample size determination | ○ | Desired precision | | ○ | How data will be used | | | | | ○ | Resources necessary for data collection |

|

| | ○ | Maintain representativeness |

|
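The slide above names desired precision as a primary factor in sample size determination but does not say which formula OPQA uses. As an illustration only, the sketch below applies the standard normal-approximation formula for estimating a proportion, n = z² · p(1 − p) / e², where e is the margin of error. The function name, the 95% confidence default, and the worst-case rate of 0.5 are assumptions, not values from the presentation.

```python
import math
from statistics import NormalDist


def sample_size_for_proportion(margin_of_error, confidence=0.95, expected_rate=0.5):
    """Reviews needed to estimate a rate within +/- margin_of_error.

    Uses the textbook normal approximation for a simple random sample;
    this is an assumed method, not OPQA's documented procedure.
    """
    if not 0 < margin_of_error < 1:
        raise ValueError("margin_of_error must be between 0 and 1")
    if not 0 < confidence < 1:
        raise ValueError("confidence must be between 0 and 1")
    # Two-sided critical value, e.g. about 1.96 for 95% confidence.
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    variance = expected_rate * (1 - expected_rate)
    return math.ceil(z ** 2 * variance / margin_of_error ** 2)


# Tighter precision requires many more reviews.
print(sample_size_for_proportion(0.05))  # 385
print(sample_size_for_proportion(0.03))  # 1068
```

The comparison shows why precision and review resources trade off: narrowing the margin from 5 to 3 percentage points nearly triples the number of reviews required.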

|

| • | Quality Metrics as an EPQI Program |

| • | Quality Metrics – Feedback | ○ | Feedback from Federal Register Notice – 32 submissions received | ■ | 6 submissions by Intellectual Property Organizations | | ■ | 1 submission by Law Firms | | ■ | 4 submissions by Companies | | ■ | 21 submissions by Individuals (18 unique individuals) |

|

|

| • | Quality Metrics – Redefined

|

| |

|

| • | Quality Metrics – Key Product Indicators

|

| • | Key Product Indicators – Correctness | ○ | Correctness metrics will show compliance rate by statute | | ○ | Compliance Rate = (Total Reviews – Non-Compliant Reviews) / Total Reviews | | ○ | Non-Compliant Reviews = Omitted + Improper Rejections | | ○ | The total number of reviews will remain constant for all statutes and includes those reviews that USPTO’s Office of Patent Quality Assurance conducts on randomly-sampled Office actions |

|
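The correctness metric above is the share of reviews for a statute that are not non-compliant, where non-compliant reviews are omitted plus improper rejections. A minimal sketch of that arithmetic follows; the `StatuteReviewCounts` structure and the example numbers are hypothetical and do not reflect USPTO data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StatuteReviewCounts:
    """Review tallies for one statute (illustrative structure, not a USPTO schema)."""
    total_reviews: int
    omitted_rejections: int
    improper_rejections: int


def non_compliant_reviews(counts):
    """Non-Compliant Reviews = Omitted + Improper Rejections."""
    return counts.omitted_rejections + counts.improper_rejections


def compliance_rate(counts):
    """Compliance Rate = (Total Reviews - Non-Compliant Reviews) / Total Reviews."""
    if counts.total_reviews <= 0:
        raise ValueError("total_reviews must be positive")
    non_compliant = non_compliant_reviews(counts)
    if non_compliant > counts.total_reviews:
        raise ValueError("non-compliant reviews cannot exceed total reviews")
    return (counts.total_reviews - non_compliant) / counts.total_reviews


# Made-up numbers for illustration only.
example = StatuteReviewCounts(
    total_reviews=1000, omitted_rejections=30, improper_rejections=50
)
print(f"{compliance_rate(example):.1%}")  # 92.0%
```

Because the total number of reviews is held constant across statutes, rates computed this way share a denominator and can be compared directly.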

| • | Key Product Indicators – Clarity | ○ | The USPTO is working on developing clarity metrics | | ○ | The Office is continuing to work on ensuring that the MRF captures clarity data as accurately as possible | | ○ | The USPTO is analyzing the MRF’s clarity data for purposes of identifying quality trends |

|

| |

|

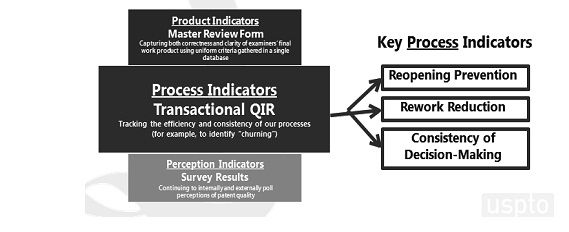

| • | Quality Metrics – Key Process Indicators

|

| • | Key Process Indicators – Approach | ○ | Focus on three process indicators from our Quality Index Report (QIR) | ■ | Reopening Prevention | | ■ | Rework Reduction | | ■ | Consistency of Decision Making |

| | ○ | Use data to identify outliers for each indicator for further root-cause analysis | | ○ | Based on root-cause analysis, work to either capture any identified best-practices or train examiners, as appropriate |

|

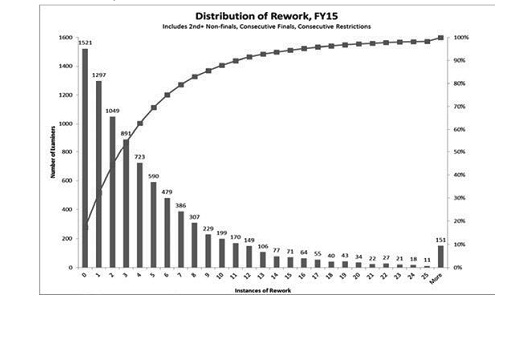

| • | Metrics Example – Rework Reduction

| ○ | Metric is sum of transactional QIR data points including consecutive finals, consecutive restrictions, and 2nd+ non-finals | | ○ | Note: Instances of rework impacted by Alice Corp. v. CLS Bank decision |

|
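The rework metric described above is a sum of three kinds of transactional data points. The sketch below shows one way such a count could be tallied from a list of transaction labels; the label strings and the `rework_metric` function are hypothetical, since the slides do not describe the QIR data format.

```python
from collections import Counter

# Transaction labels counted as rework, per the slide; the strings are illustrative.
REWORK_TYPES = (
    "consecutive_final",
    "consecutive_restriction",
    "second_plus_non_final",
)


def rework_metric(transaction_types):
    """Sum the occurrences of the three rework transaction types."""
    counts = Counter(transaction_types)
    return sum(counts[kind] for kind in REWORK_TYPES)


# Made-up transaction history for illustration only.
sample = [
    "consecutive_final",
    "first_non_final",  # not rework, so it is ignored
    "second_plus_non_final",
    "consecutive_restriction",
    "second_plus_non_final",
]
print(rework_metric(sample))  # 4
```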

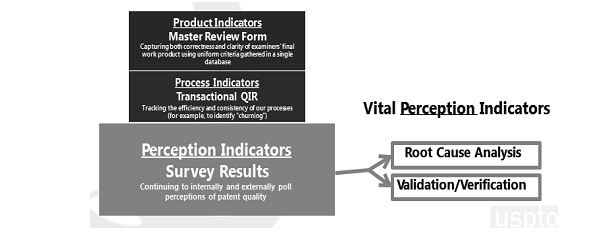

| • | Quality Metrics – Key Perception Indicators

|

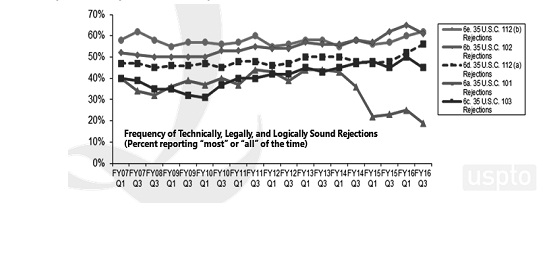

| • | Key Perception Indicators – Approach | ○ | USPTO has conducted internal and external perception surveys semi-annually since 2006 | ■ | External survey is of 3,000 frequent-filing customers | | ■ | Internal survey is of 750 randomly selected patent examiners |

| | ○ | The survey results will be used to validate other quality metrics |

|

| • | Perception Survey Results – Example

|

| |

|

| • | Quality Metrics – Next Steps | ○ | Publish Compliance Targets | | ○ | Publish Clarity Data and Process Indicators | | ○ | Action Plans on Process Indicators | | ○ | Evaluate Perception Indicators |

|